New Publication! Presenting the XAI Novice Question Bank – a catalogue of lay people’s information needs about AI systems

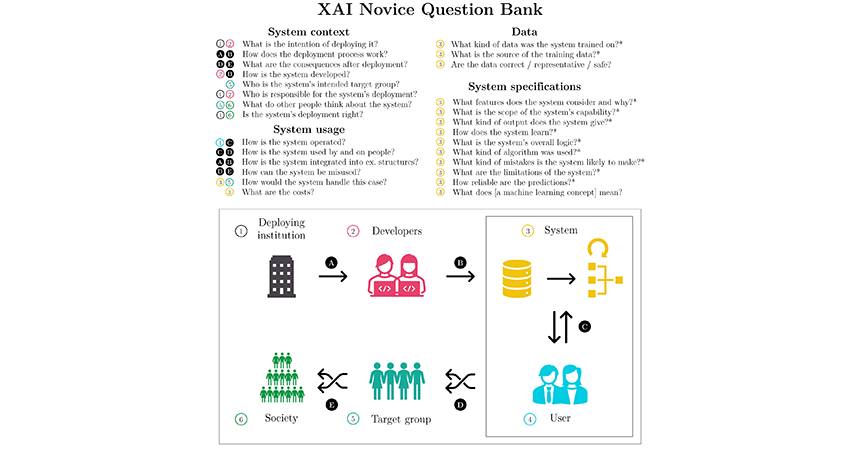

We are happy to announce that Timothée Schmude's paper was accepted to the International Journal of Human-Computer Studies! In this work, we present the "XAI Novice Question Bank," an extension of the XAI Question Bank (Liao et al., 2020), which catalogs the information needs of AI novices in employment prediction and health monitoring. The catalog focuses on areas such as data, system context, usage, and specifications, based on task-based interviews where participants asked questions and received explanations about AI systems.

AI systems that make decisions about people affect a range of stakeholders, but explanations of these systems often overlook the needs of AI novices. With this work, we aim to address the gap between the information provided and the information needed by those impacted, such as domain experts and decision subjects.

Our analysis showed that explanations improved participants' confidence but also exposed challenges in understanding the systems, such as difficulties in finding information and assessing their own comprehension. Participants’ prior perceptions of system risks influenced their questions: high-risk concerns prompted questions about system intent, while low-risk perceptions led to questions about system operation. We outline five key implications to guide the design of future AI explanations for lay audiences.

Information that matters: Exploring information needs of people affected by algorithmic decisions

Timothée Schmude, Laura Koesten, Torsten Möller, Sebastian Tschiatschek. In: International Journal of Human-Computer Studies, Volume 193, 2025. https://doi.org/10.1016/j.ijhcs.2024.103380

Keywords: Explainable AI; Understanding; Information needs; Affected stakeholders; Question-driven explanations; Qualitative methods